This is a database of photos and accompanying information that can be used for vision experiments, especially on search, as well as the training and testing of computer vision algorithms. It contains 3 versions of 130 scenes where a particular target object is in a more expected position, a more unexpected position, or missing. Additionally, the target objects associated to each scene are characterized by photographs from 80 viewpoints at three vertical angles (240 photos per object). Our database combines the following properties:

- each target object photographed individually from multiple views, and at two different positions (expected and unexpected) within a particular scene (for which there is also version without the target object)

- target objects were actually photographed in the scenes, not pasted there by graphics editing, avoiding artifacts such as wrong shading, lighting, or pose errors

- scene photos were taken under controlled conditions with respect to photographic parameters and layout

- all scene and target photos were corrected for optical artifacts and distortions

- high-resolution pixel-level segmentations of the target objects in the two corresponding scenes

- semantic information on local scene context of the target object

- experimentally obtained contextual prior maps of each scene, in which the likelihood of finding the target object at a particular location is quantified

- meta-information on size of target object in the scene, distance of target object to image center, contextual prior value and saliency of the target object within the scene

In the following we provide an overview on the different components of our database. For a detailed description, see our paper [1].

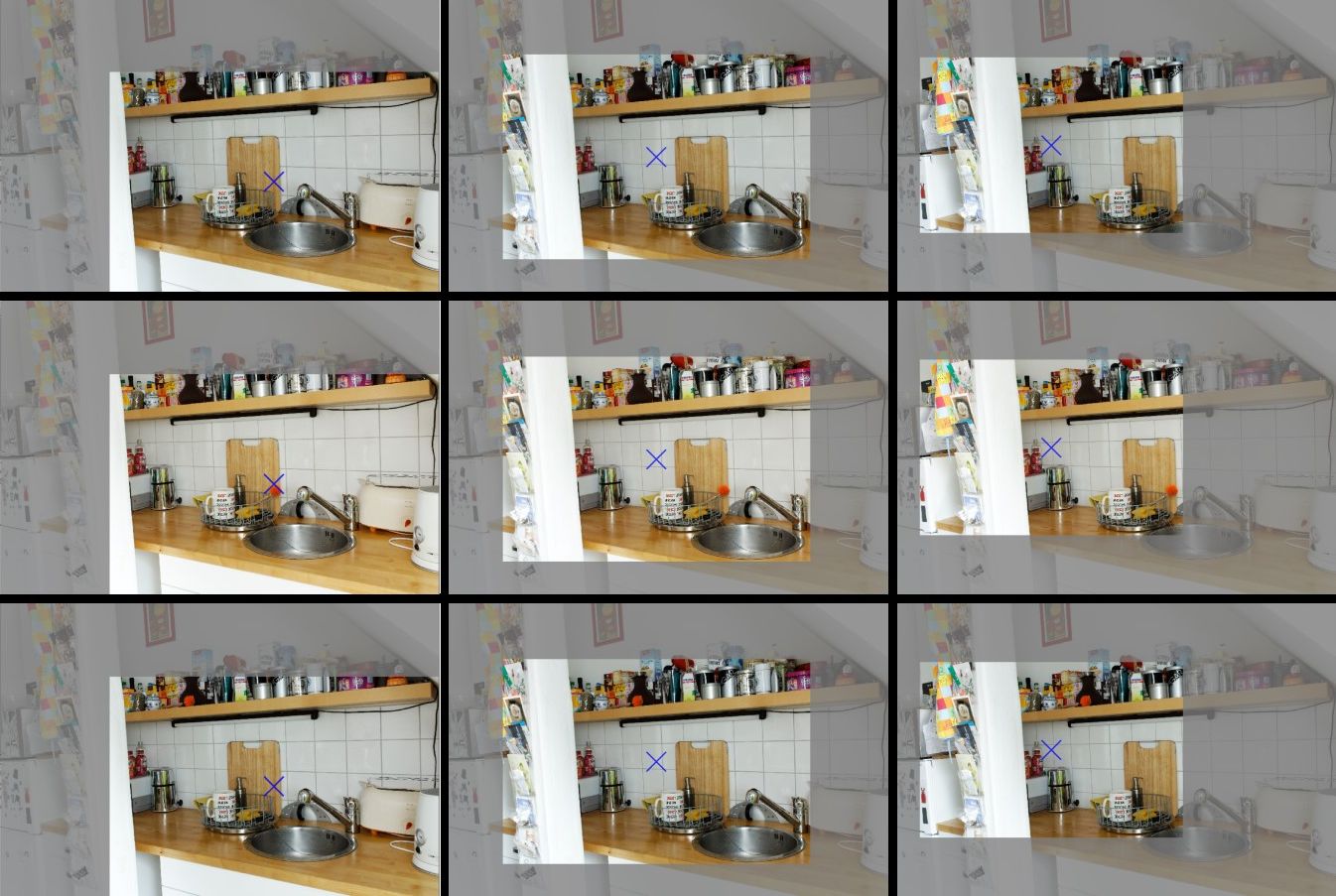

Scenes

Photos of 130 cluttered everyday scenes were taken at a resolution of 4272 x 2848 pixels. One particular object per scene was chosen as target object, and its position was modulated. Three version of each scene were photographed, one in which the target object was at an expected location (high contextual prior), one in which it was in an unexpected location (low contextual prior), and one where the target object was missing. In the example below, the target object is an egg.

Segmentation Masks

For the version of the scenes where the target objects are missing, binary segmentation masks are provided at high resolution. During the analysis of vision experiments these can be used to extract certain image features of map values at the location of the target objects, such as saliency or contextual prior values. It also provides a ground truth for target object classification and pixel labelling in computer vision.

Target Objects

All 130 target objects have a varying absolute size, ranging from a few millimetres up to about 60 centimetres, such as a coin, a pair of scissors, a cooking pot, or a tire of a car.

Each target object is characterized by 240 views: 3 vertical angles, 80 rotation angles (in steps of 4.5°). These can for example be used to present different views of the target object to prime subjects in a search experiments, or to build target object models in computer vision. The images were down-sampled to a resolution of 1152 x 768 pixels to make them available online.

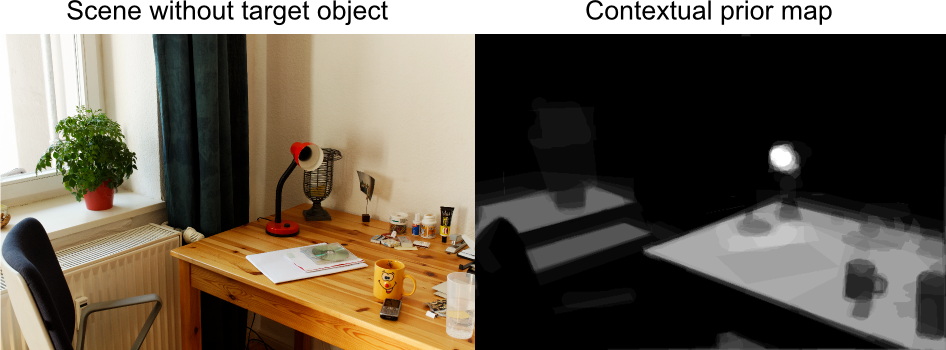

Contextual prior maps

Contextual prior maps can be used to quantify the contextual prior of finding the target object at one particular location in the scene. The maps were obtained by asking 10 subjects to do a dense pixel labelling of each scene where the target object was missing. Labels reflected the likelihood of finding the target object at one particular location and were transformed to numerical values (very likely=1.0, likely=0.66 unlikely=0.33 and highly unlikely=0.0). Individual labellings were averaged and saved as image. The graylevels of these images encode the contectual prior maps ranging from black (highly unlikely) to white (very likely). In order to retain the maps for a quantitative analysis, the images need to be re-normalized such that the sum over all pixels is a constant value.

Meta information

The size of the target object its to the image center was measured for each scene image in pixels. Contextual prior values as well as various bottom-up saliency measures [2] were calculated for the target object at both expected and unexpected locations in the corresponding scene.

References

[1] Johannes Mohr*, Julia Seyfarth*, Andreas Lueschow, Joachim E. Weber, Felix A. Wichmann, Klaus Obermayer (2016). BOiS - Berlin Object in Scene Database: Controlled Photographic Images for Visual Search Experiments with Quantified Contextual Priors. Front. Psychol. 7:749. doi: 10.3389/fpsyg.2016.00749

[2] Laurent Itti, Christof Koch, Ernst Niebur. A Model of Saliency-Based Visual Attention for Rapid Scene Analysis (1998). IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 11, pp. 1254-1259.

*equal contribution